by Warren Gaebel

| Nov 06, 2012

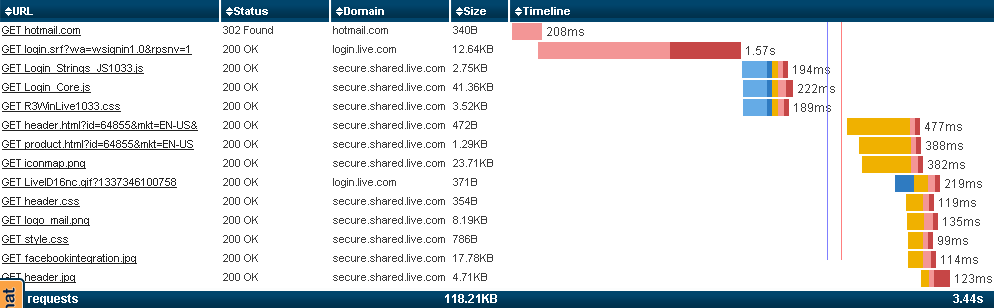

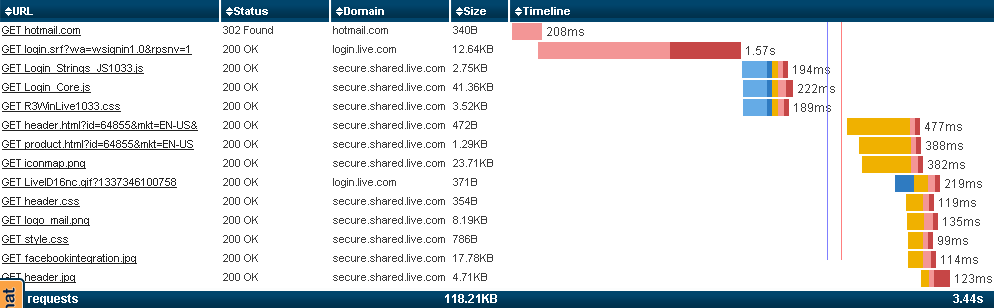

So far, this series presented the details of all the things that happen after a user clicks a link to retrieve a new web page. It purported to follow the details chronologically, but in truth we know that cannot be true. If all the processes we discussed were to happen consecutively, the web page would take so long to load that even the most long suffering would give up and go elsewhere.Fortunately, web browsers download more than one component at a time. We can see this graphically in waterfall charts. If all downloads were serial, the graph would have a perfect staircase design with each step beginning where the previous one left off. Instead, we see stairs underneath stairs, which indicates concurrency between the two steps. [Use the Paid Monitor Page Load tool to see for yourself.]

So far, this series presented the details of all the things that happen after a user clicks a link to retrieve a new web page. It purported to follow the details chronologically, but in truth we know that cannot be true. If all the processes we discussed were to happen consecutively, the web page would take so long to load that even the most long suffering would give up and go elsewhere.Fortunately, web browsers download more than one component at a time. We can see this graphically in waterfall charts. If all downloads were serial, the graph would have a perfect staircase design with each step beginning where the previous one left off. Instead, we see stairs underneath stairs, which indicates concurrency between the two steps. [Use the Paid Monitor Page Load tool to see for yourself.]

The more we can slide those stairs underneath each other, the more concurrency we achieve and, more importantly, the sooner the page is fully loaded. This episode discusses techniques for making those stairs slide under each other.

One Component At A Time?

If the user’s click downloads the HTML for the web page, then waits for the HTML to arrive, then downloads a stylesheet, then waits for the stylesheet to arrive, then downloads an image, then waits for the image to arrive, and so on, we would see a perfect staircase in our waterfall chart (the opposite of what we want). Loading the new web page will take forever (well, there may be a bit of hyperbole there).

Fortunately, modern web browsers request multiple components at a time, which gives us some level of concurrency. The question is: Is this concurrency enough? In many cases, the answer is a clear NO.

Performance Consideration:

Should components be separate downloads or should they be inlined into the main <html> file? The answer’s not as easy as one might think.

Inlining eliminates all but one connection. Since each connection has an associated performance penalty, this minimizes those penalties.

Example: I created a 1,000,000 byte document with no JavaScript, no CSS, no images, and only the <html>, <head>, <title>, and <body> tags (and their associated close tags). The content was all the articles I’ve ever written for the Monitor.Us blog. With a primed cache, the page loaded in under half a second. With an empty cache and compression on, the page loaded in under two seconds. That’s not bad! However, the result is influenced by bandwidth, distance to server, and the user’s browser choice.

Conclusion: If this very large file can download this fast, smaller files that inline everything may offer better performance than the same file split into separately downloaded component files.

Of course, this approach eliminates caching, which can be an important performance tool. This is an important tradeoff that cannot be ignored. The only way to know which is better for your web page is to try it both ways and measure the results. This will take a few minutes, but the result may surprise you.

JavaScript and CSS block concurrency. When they are being downloaded, parsed, and executed, existing downloads will continue, but anything following has to wait (again, the opposite of what we want).

Performance Consideration:

Move downloading and execution of all scripts to the bottom of the <html> if they:

- must execute before the document becomes interactive, and

- do not write content to the document (or write it at the bottom).

Images are better behaved. The download request is sent, then the browser engine’s parser continues on just as if the image had already been received. Downloading images is almost truly concurrent.

I say “almost” because the browser and the server both impose limits on how many requests can be outstanding at one time. The lower that number, the closer we get to serial processing. The higher it is, the more concurrent the processing. The standards say the limit should be two, but many browsers and servers set the default higher than that and/or allow us to change it.

Performance Consideration:

Serve components from multiple domains. The browser and server impose limits on the number of outstanding requests, but those limits are

per domain. If your components come from two domains, each domain has its own limit, which means you can double the number of concurrent downloads. Using three domains will triple the limit, etc. However, as

Tenni Theurer and Steve Souders demonstrate, this technique can actually make performance worse if it’s carried too far. Sticking to two or three domains may be best. Measure it yourself to be sure.

Pretend Concurrency

Three techniques,

- the two timer functions,

- the defer and async attributes, and

- JavaScripts triggered by onLoad,

are sometimes said to allow concurrency. This is not quite true – JavaScript execution is single-threaded, so concurrency of execution is impossible. However, the benefits of these techniques are very real and should not be ignored.

These three techniques move downloading and/or processing to a more appropriate time. For example, instead of downloading and executing the JavaScript code when the parser gets to it, we can postpone downloading/execution to let other, more important, code go first.

Why do we bother with these techniques? Because we want to postpone downloading and/or execution of less important code until after the web page is interactive. The needed-now code creates whatever is necessary to make the web page interactive and also includes whatever we expect the user to need in the first second or so. The needed-soon code is likely to be needed shortly after that. The needed-now code should not be postponed. The needed-soon code can be postponed until the webpage is interactive (i.e., when onLoad fires).

Defer & Async:

When the parser encounters a script, it normally waits while the script is downloaded and executed. This blocks the parser, the layout engine, and the rendering engine, which is the serial behaviour we’re trying to avoid.

If the script tag includes a defer attribute, the script is immediately downloaded while the parser continues its work. The downloading is concurrent. The script is executed after the parser finishes and before onLoad fires. Execution is not concurrent – it is merely being done at a more convenient time.

Our key takeaway: Scripts with the defer attribute download concurrently, but they do not execute concurrently and they block onLoad.

Performance Consideration:

Use defer without async for all scripts that:

- must execute before the document becomes interactive,

- do not write content to the document, and

- are of a lower priority than the following scripts.

The async attribute is almost the same as the defer attribute. Both begin downloading immediately and asynchronously, which lets the parser get on with what it’s doing. The async attribute, though, does not wait for the parser to finish. It executes the downloaded code at the first opportunity, which can block other scripts from executing. [Remember: Since JavaScript can only do one thing at a time, all scripts are serialized.]

Performance Consideration:

Use defer and async together for all scripts that:

- must execute before the document becomes interactive,

- do not write content to the document, and

- do not need to be synchronized with other scripts.

The defer attribute guarantees that the scripts are executed in order (at least, that’s what the standard says). The async attribute will likely cause the scripts to be executed out of order (because they’re executed as soon as possible after they’re downloaded, and their download times can be quite different).

The defer attribute works in most browsers. The async attribute works in newer browsers. If we want to use async, we should use both async and defer. Browsers that understand async will ignore defer if both are used. Browsers that don’t understand async will ignore it, but will use the recognizable defer attribute.

Timer Functions:

JavaScript’s two timer functions, setTimeout() and setInterval(), can postpone JavaScript execution for a specified amount of time. This is not true concurrency; it merely causes execution to occur at a more opportune time. [Remember: JavaScript always executes serially.]

If the timing interval expires before onLoad fires, the script’s execution will block onLoad. If the timing interval expires after onLoad fires, the script’s execution will not block onLoad. Since the programmer doesn’t know the number of milliseconds remaining before onLoad, he cannot say for certain whether execution will block onLoad or not.

setTimeout() can be used to give priority to the scripts that follow by setting the delay to 1 ms. Because of the way timers work, a 1 ms delay will allow all queued JavaScripts to execute before this one. To be more precise, it allows all currently-queued scripts and all scripts that queue within the next 1 ms to execute first.

JavaScript-Triggered onLoad:

<body onLoad=”myNeededSoonScripts();”> allows us to postpone script execution until after onLoad fires, which is the point at which the web page is fully interactive. Although this technique is not true concurrency, it does make the web page available sooner, which is one of our key performance objectives.

If myNeededSoonScripts() changes the appearance of the web page, the end-user will see that change. However, if it happens in a part of the web page not currently being viewed and it doesn’t cause a reflow, he might not even notice.

If the end-user notices the change, this technique is not as effective as it can be. At the least, it can distract him from what he’s doing. Worse, it can cause the page to jump around (the result of reflows), which means the user cannot use the web page at that time.

Performance Consideration:

Postpone downloading and execution until after onLoad fires for all scripts that:

- can execute while the user is reading the webpage and deciding what to do next (a few seconds)

- will not distract or delay the user when they execute.

Real Concurrency

Although JavaScript execution can never be concurrent (it’s a single-threaded language), downloading can be. That’s what iframes and XMLHttpRequests are for.

iframes:

Iframes are documents inside documents. The inner document loads concurrently with the outer document. Just what the doctor ordered.

Gotcha #1: The iframe and the main document share the same connection pool, so we haven’t increased the maximum allowable number of outstanding requests. If that’s our bottleneck, the iframe won’t help.

Gotcha #2: The outer document’s onLoad will not fire until after the inner document’s onLoad fires. So we’ve achieved concurrency, which may have helped somewhat, but onLoad is still being delayed.

Several techniques have been proposed to get around the onLoad-blocking problem. The “dynamic async iframe” technique, presented by Marcus Westin and Martin Hunt at Velocity 2010, works best if the content of the iframe is of equal importance to the content of the outer document. Aaron Peters provides this code, which I have simplified to make the main points more salient:

//Notice the onLoad. The inner document is blank until

//AFTER its onLoad fires. And its onload fires almost

//immediately because it's blank. Cool!

innerDocText =

'<body onLoad="' +

'var d = document;' +

'd.getElementsByTagName(\'head\')[0].' +

'appendChild(d.createElement(\'script\')).src' +

'=\'url-to-js-file\'"></body>';

var myIframe = document.createElement('iframe');

document.body.appendChild(myIframe),

innerDoc = myIframe.contentWindow.document;

innerDoc.open();

innerDoc.write(innerDocText);

innerDoc.close(); //inner document's onLoad event happens here

Aaron’s discussion/explanation is top-notch, so click on the link above to learn more.

When the content of the iframe is less important than the content of the outer document, Aaron’s “iframe after onLoad” technique may suit your purposes better. It does not download the iframe’s contents until after the outer document’s onLoad fires.

The “dynamic async iframe” technique (above) starts the download immediately, which makes the iframe and the outer document compete for the available connections. With the “iframe after onLoad” technique, the outer document’s onLoad will fire sooner and the inner document’s onLoad will fire later.

XMLHttpRequest

It’s name implies that it’s for XML only, but it can be used to download anything – XML, JSON, HTTP, JavaScript, CSS, text, images, and anything else you can imagine. The most important point here is that the download is concurrent with everything else.

This technique is typically used after onLoad to dynamically download content, scripts, or images, so whether or not it blocks onLoad is irrelevant. It can be triggered by any event, including onLoad, timers, and mouse clicks.

Performance Consideration:

Use XMLHttpRequest() to load dynamic content after onLoad fires. Provide a progress indicator to let the user know that something is still happening and to give him an idea of how long it will take.

Caveat: Size and position the container before onLoad fires so the web page won’t jump around when the content is finally available.

The New Kid on the Block

Something new is in the works. Its name is SPDY. It deserves a mention here because it’s making inroads into the web world, and if/when it is fully adopted, it renders some of the above discussion obsolete.

SPDY stands for, well, um, I don’t know. I’ve searched and I’ve searched, but if it’s an abbreviation for something, I still don’t know what it is. Until I find out, I’m going to say it’s short for Superior-Performance Downloading from Yoda because of the rumour that Yoda helped create it. Maybe it’ll catch on.

SPDY creates a connection, requests all the components at the earliest possible time, then lets the server send them one after another on the same connection. Rudimentary prioritization is possible, so if the server has several components ready to go, it can send the higher priority ones first.

Although SPDY may not be the same in a year or two, it seems destined to be a driving force in how web components are retrieved from a server. Why? Because it’s faster.

We’re not there yet, but we’re getting there. Yoda would be proud.

But I Want Multithreaded Scripting

So, did I repeat “JavaScript has only one thread, so you can’t execute it concurrently” enough times? No? One more time then – JavaScript is single-threaded. You can’t get around that.

Or can you?

If you truly want multithreading and you’re willing to pay the price (race conditions, non-atomic operations, convoluted code that no one else understands, etc.), there may be a way. I’m not recommending this, but for those who want to play…

I created this code in Chrome Version “22.0.1229.94 m”. If you want it to work in a different browser, you may have to fix the DOM traversal. And even if you get it working in some other browser, there’s no guarantee that the other browser will give the same result. [Note, too, that it’s merely a proof of concept – real production code needs a lot more than this.]

<html><head><script type="text/JavaScript">

function addContent() {

if (window.parent===window.self) {

setInterval ('document.getElementById("myIframe").contentDocument.body.innerHTML += "O"', 5000);

}else{

setInterval ('document.body.innerHTML += "I";', 7000);

}

}

</script></head><body onLoad="addContent();">

<iframe id="myIframe" src="thisFile.html"></iframe>

</body></html>

This file (thisFile.html) creates an iframe that loads the same document a second time. [Your browser may or may not protect you from the infinite recursion.] In both the outer and the inner documents, addContent() will be executed after the document is fully loaded. The outer document appends O to the output. The inner document appends I. In both cases the output goes to the inner document, and the outer document remains blank.

When I run this in my version of Chrome, the output is a bunch of I’s and O’s intermingled. And there we have a simulated, somewhat convoluted, form of multithreading. It’s not true multithreading, but it’s a good starting point if you want to play with it.

In case you’re wondering, addContent() knows it’s the outer document if window.parent and window.self are the same. In other words, the outer document is its own parent. [If you like this confusing relationship, you’ll love Ray Stevens’ I’m My Own Grandpa.]

Conclusion

Web pages consist of multiple components. Performance can be enhanced by downloading more than one component at a time.

Downloading can be serial with parsing, concurrent with parsing, delayed slightly, delayed until after onLoad, or delayed even longer.

Script execution can be serial with parsing, delayed slightly during parsing, delayed until the parser is finished, delayed until onLoad, or delayed any number of milliseconds.

Just to keep the record straight, Yoda and Ray Stevens really have nothing to do with website performance.

Post Tagged with

So far, this series presented the details of all the things that happen after a user clicks a link to retrieve a new web page. It purported to follow the details chronologically, but in truth we know that cannot be true. If all the processes we discussed were to happen consecutively, the web page would take so long to load that even the most long suffering would give up and go elsewhere.Fortunately, web browsers download more than one component at a time. We can see this graphically in waterfall charts. If all downloads were serial, the graph would have a perfect staircase design with each step beginning where the previous one left off. Instead, we see stairs underneath stairs, which indicates concurrency between the two steps. [Use the Paid Monitor Page Load tool to see for yourself.]

So far, this series presented the details of all the things that happen after a user clicks a link to retrieve a new web page. It purported to follow the details chronologically, but in truth we know that cannot be true. If all the processes we discussed were to happen consecutively, the web page would take so long to load that even the most long suffering would give up and go elsewhere.Fortunately, web browsers download more than one component at a time. We can see this graphically in waterfall charts. If all downloads were serial, the graph would have a perfect staircase design with each step beginning where the previous one left off. Instead, we see stairs underneath stairs, which indicates concurrency between the two steps. [Use the Paid Monitor Page Load tool to see for yourself.]